Published On May 3, 2006

ONE SPRING EVENING IN 2005, Andre LeClerc, a slight 37-year-old of medium height, walked into a sparsely furnished room on the Medical University of South Carolina campus. He crossed to a set of green cupboards and drawers and quickly found what he was looking for: a man’s gold watch and a woman’s white-gold ring set with turquoise stones. After hesitating just a moment, he pocketed the ring and left the room.

LeClerc was an investment adviser at a local bank. He wasn’t a thief by nature; he was only doing what he’d been asked. He had stolen something, and now he was going to lie about it.

The magnetic resonance image scanner— “Big Maggie,” as it’s known at the university’s Center for Advanced Imaging Research—stands in the center of a large room. Minutes after his theft, as LeClerc lay inside the machine, a series of questions flashed on a screen above him. Some were neutral: Do you like to swim? Do you have a cat? He clicked one button for yes, another for no. And some were not: Did you take the watch from the drawer? (No, LeClerc clicked, truthfully.) Did you take the ring from the drawer? (No, again—a lie this time.) Is the watch with your possessions? (No.) Did you hide the ring? (No—another lie.)

The questions seemed endless, and LeClerc had to remind himself of the extra $50 he’d been promised if he could fool Big Maggie. When at last he was finished, he approached main investigator F. Andrew Kozel. “So,” LeClerc said, “what did I take?”

It was a question most participants asked one way or another. And though Kozel never tipped his hand (he gave everyone the $50) to avoid tainting post-study interviews with subjects, nine times out of 10 the answer to the basic query—Could you tell when I was lying?—was yes.

Most of us lie, every day. Starting in early childhood, we compliment, flatter and deliberately deceive to avoid conflict or to gain advantage. Our closest nonhuman relatives also lie, though less often. Among the more highly developed primate species, the larger the neocortex—the outer surface of the brain involved in conscious thought—the more frequent the primate’s deception.

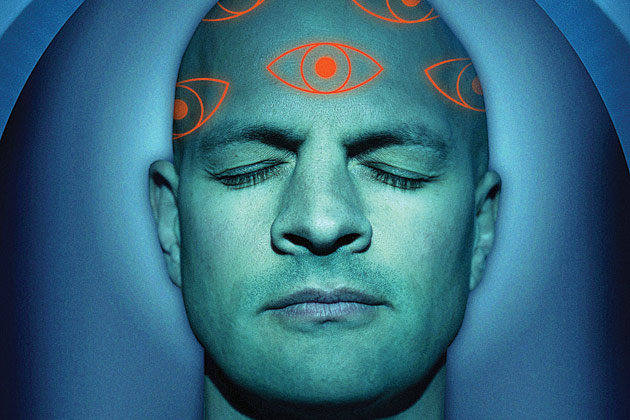

But some lies are more damaging than others, and there are those—employers, police, courts, government agencies—who would pay dearly for the ability to distinguish truth from falsehood. Until recently, tools for detection have been crude. But now, as scientists find ways to peer inside our heads, several new lie detectors may be on the verge of commercial applications—and are raising pointed scientific, legal and ethical issues.

EARLY LIE DETECTORS, SUCH AS THE POLYGRAPH, measured brain activity indirectly, through signs of arousal—an increased heart rate and perspiration, for instance. Psychologist William Moulton Marston developed a polygraph prototype around the time of the First World War. From the start, critics noted that the polygraph could easily be confounded by intellectual and emotional stresses. Nevertheless, it was widely adopted.

A review of polygraph studies by the National Academy of Sciences in 2003 found that the test was generally better than chance under laboratory conditions. But the review panel also pointed out that most studies failed to consider possible countermeasures and could not be generalized to real-world results. Thus, the panel concluded, the polygraph is unsuitable for screening for terrorists and other threats: false positives would lead to many innocent people being wrongly accused, and even then the test couldn’t possibly catch everyone with something to hide. Still, the U.S. government administers thousands of polygraph tests every year to job applicants and federal employees.
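The panel’s worry about false positives comes down to base-rate arithmetic. The short Python sketch below works through it with purely hypothetical numbers that do not come from the review: 10,000 people screened, 10 of whom have something to hide, and a test assumed to be right 90% of the time for liars and truth-tellers alike. The function name `screening_outcomes` and every input are illustrative assumptions.

```python
def screening_outcomes(population, liars, sensitivity, specificity):
    """Split a screened population into expected outcome counts.

    sensitivity: share of liars the test flags.
    specificity: share of truthful people the test clears.
    """
    truthful = population - liars
    caught = liars * sensitivity                    # liars correctly flagged
    missed = liars - caught                         # liars who pass the test
    falsely_flagged = truthful * (1 - specificity)  # truthful people flagged
    return caught, missed, falsely_flagged


# Hypothetical inputs, chosen only for illustration (not from the review).
caught, missed, falsely_flagged = screening_outcomes(
    population=10_000, liars=10, sensitivity=0.90, specificity=0.90
)

# Of everyone the test flags, what share is actually lying?
share_lying = caught / (caught + falsely_flagged)

print(f"Liars caught: {caught:.0f}, liars missed: {missed:.0f}")
print(f"Truthful people flagged: {falsely_flagged:.0f}")
print(f"Share of flagged people who are lying: {share_lying:.1%}")
```

Under these assumed figures, about a thousand truthful people are flagged in order to catch nine of the ten liars, so fewer than 1 in 100 of those flagged is actually lying, and one liar still passes.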

In contrast, most of the new lie detection technologies are based on a growing understanding of what happens when we prevaricate. In 2001, in a precursor to Kozel’s work, Sean Spence and his colleagues at England’s University of Sheffield began using functional magnetic resonance imaging (fMRI) to localize lying in the brain. Spence asked volunteer subjects about activities they might have performed, instructing them to answer some questions truthfully and to lie in response to others. Lying, it turned out, activated areas of the brain not involved in telling the truth.

Initially, all such research compiled only average responses, considering all instances of lying and truth-telling in aggregate rather than looking at a particular subject’s truthfulness. The first two studies to focus on individual subjects’ lies were published last year. One involved Kozel’s experiments, in which subjects “stole” a ring or watch. The other, by Daniel Langleben of the University of Pennsylvania, appeared just a few weeks earlier than Kozel’s.

Langleben gave male undergraduates an envelope containing two playing cards. The students were told to lie about one card. As in Kozel’s experiment, subjects were offered a monetary reward for successful deception. Using fMRI, Langleben was able to identify 90% of deceptive responses and 93% of truthful ones.

Although Kozel and Langleben employed different experimental designs and approaches to analyzing scanner data, they came to strikingly similar conclusions. “The patterns seem to be almost identical,” Langleben says. Subjects who lied had increased activation in the prefrontal cortex, particularly the right orbitofrontal area, just above the right eye.

Kozel and his mentor Mark George are now conducting a study that should more closely mimic real-world deception. Investigators in this experiment won’t know which, or how many, subjects are lying. Steve Laken, chief executive of Cephos Corp., hopes to launch the technology commercially this year. Although “all the three-letter agencies” are interested, according to Laken, he predicts the first client will be a civil or criminal defendant who wants to prove he is telling the truth. Initially, Laken thinks, the scans should add to witnesses’ credibility but won’t be seen as guarantees of veracity. Still, he says, fMRI lie detection could become a “few hundred million” dollar business within five years.

Kozel, now at the University of Texas Southwestern Medical Center, is investigating an idea that would take the technology even further. With George, he proposes combining fMRI scans with something called transcranial magnetic stimulation (TMS)—a magnetic wand applied to the outside of the skull that induces an electrical field in the brain area beneath it. By interfering with the normal functioning of a specific brain region, the current generated by TMS can temporarily disable that area. TMS is being tested as a treatment for several neuropsychiatric conditions. Kozel and George suggest using fMRI to locate the part of the brain involved in deception and then employing TMS to shut it down—rendering a subject unable to lie. Even if it works, Kozel warns, it will require extensive testing to ensure proper use.

AS A TRAVELER APPROACHES AN AIRPORT SECURITY CHECKPOINT, a screener asks, “Did you pack this bag yourself?” The traveler answers yes, but the screener is suspicious. Politely but firmly, he says, “Please step over to the scanner.”

The size and cost of MRI machines may always limit their deployment, but they’re not the only option for finding out whether someone is telling the truth. Optical sensors operating at near-infrared wavelengths could be cheaper and more portable. A research team at Drexel University in Pennsylvania, led by Scott Bunce, an assistant professor of psychiatry, has developed a small panel with four light sources and 12 detectors. The device is placed on the foreheads of volunteers, who participate in a playing card experiment.

Near-infrared light easily penetrates most tissue, but certain wavelengths absorbed by hemoglobin can be used to detect increased oxygenation—a marker of regional activity in the brain. In the playing card test of deception, “we could correctly classify 20 out of 21 individuals,” says Bunce. He calls the current apparatus “field deployable,” though it requires direct contact with—and the cooperation of—the subject.

Meanwhile, Britton Chance of the University of Pennsylvania, who invented the panel used in the Drexel research, is working on a system that operates several meters from the subject’s forehead—“with or without the subject’s knowledge.” Chance, too, is exploring commercial applications and has formed a company, Non-Invasive Technology. He hasn’t published detailed studies, however, because he says security agencies interested in his work don’t want him to reveal too much.

WHILE THESE TECHNOLOGIES PROGRESS, another approach has already found its way into the courts. Larry Farwell is the founder of Brain Fingerprinting Laboratories, which markets the use of electroencephalogram readings to assess familiarity with certain information. Farwell’s technology has been extensively covered in the media, especially in connection with one murder case.

On July 22, 1977, in Council Bluffs, Iowa, a night watchman was killed by a shotgun blast. A young black man, then 19, was found guilty of first-degree murder. The man maintained his innocence, and 25 years later, brain fingerprinting was admitted as evidence in his attempt to obtain a new trial.

To conduct the brain fingerprinting, Farwell visited the man in the Iowa State Penitentiary. Three electrodes, placed against the convict’s scalp, monitored his brain as he watched a series of short phrases flash across a screen. The phrases had been designed to probe knowledge of the crime scene and details of the convict’s alibi.

According to the theory of brain fingerprinting, the brain produces an electrical surge 300 milliseconds after being presented with information it recognizes as “significant.” The surge, called a P300 wave, is followed slightly later by a dip in voltage. Farwell found the man did not produce P300 waves when confronted with details about the crime scene but did produce the waves when elements relevant to his alibi flashed across the computer screen. Farwell concluded the man’s brain did not contain information about the crime.

Although brain fingerprinting was accepted as evidence, the judge in the case was highly skeptical. In a later proceeding, the Iowa Supreme Court released the man because of a due-process violation at the time of his original trial but made no mention of Farwell’s science.

Farwell says his technology, which he claims to have tested on 200 subjects, has “not yet yielded an incorrect answer”—no false positives, no false negatives and only about 3% indeterminate responses. His studies include one at Federal Bureau of Investigation headquarters in Quantico, Va., in which Farwell says he correctly identified 17 FBI agents and four non-agents based on P300 responses to the name of the form the FBI uses to reimburse travel expenses. Still, critics contend brain fingerprinting has never been adequately evaluated in the scientific literature. During the past decade, Farwell has published a single relevant peer-reviewed study, based on experiments involving just six subjects.

EACH OF THESE TECHNOLOGIES ATTEMPTS TO UNLOCK brain-bound mysteries, raising many questions, says Paul Root Wolpe, a bioethicist at the University of Pennsylvania. Some involve the science itself; others relate to legal admissibility or ethical dilemmas. “How far does the right to privacy extend?” Wolpe asks. “Could a court compel a scan, as it can require DNA testing? Or is the skull inviolate?”

Spence, the fMRI pioneer at the University of Sheffield, worries about rushing the new technologies to market. He is especially concerned that laboratory studies have so far involved only subjects with little at stake. “Lying isn’t terribly distressing for them,” Spence says. “It would be very different for people who are suspects.”

Wolpe is particularly disturbed by Kozel and George’s interest in the approach that would shut down a person’s ability to deceive. “We don’t even know whether the areas of the brain activated during deception cause the deception, are the mind reflecting on the lie, are an emotional reaction or what,” he says. “This is an utter shot in the dark.”

On the legal front, two principal tests govern admissibility of scientific evidence. The Frye standard, which dates to 1923 and tends to hold sway in state courts, asks whether a test is generally accepted in science. During the 1990s, the federal courts replaced Frye with the more specific Daubert standard, which considers whether a technology has been peer-reviewed and whether its specificity and sensitivity are known. Yet both standards serve only as guidelines: The judge in a case decides what to admit based on the testimony of expert witnesses.

“That really bothers me,” says Hank Greely, a professor at Stanford University Law School. “You might have a good judge and bad experts, or the judge could reach the wrong conclusion.” Greely wants regulatory oversight of new lie detection technologies, perhaps by the Food and Drug Administration, whose drug approval protocol is “the closest thing we have to a data-driven process on safety and efficacy,” he says.

Other questions concern how new technologies might be used in the “war on terror.” Currently, none would be feasible for an uncooperative detainee. But what if it became permissible to force a prisoner into being scanned?

International law protects prisoners of war against coercive interrogation, but other detainees—those a government classifies as enemy combatants—fall into a murkier category. International human rights law forbids torture and tactics that are cruel, inhuman or degrading, defined by the vague standard of whether a method would “shock the conscience.” Sean Thompson of the law firm Cravath, Swaine & Moore has argued in the Cornell Law Review that fMRI would likely pass the “shock the conscience” test if there were a strong governmental interest behind an interrogation. But Thompson says the technique is too extreme to be used on every detainee. He cites a U.S. Supreme Court decision that ruled out evidence obtained with “truth serum” because the drug “overbore” a person’s will. “A scan looks nicer and more clinical than your average coercive technique,” Thompson says, “but it begs the same moral question.”

The implications of any technology that monitors the brain go far beyond detecting lies. Brain scans are already used to study how people form trusting relationships, respond to political candidates and react to advertising. But just as the polygraph has turned out to be partially effective at best, it remains to be seen how good the new technologies will be in the real world—and how society will respond.

Dossier

The Polygraph and Lie Detection (National Academies Press, 2003). The definitive report on the history and shortcomings of the polygraph.

“A Cognitive Neurobiological Account of Deception: Evidence From Functional Neuroimaging,” by Sean Spence et al., Philosophical Transactions of the Royal Society, Series B, vol. 359, November 2004. A great overview of this science, including the psychology of deception.

“Telling Truth From Lie in Individual Subjects With Fast Event-Related fMRI,” by Daniel Langleben et al., Human Brain Mapping, December 2005. The first paper to show how MRI can be used to detect deception in individuals.
